Major challenges and obstacles, and their workarounds

Migrating from AWS GovCloud to AWS Commercial

June 19, 2024

Overview

The United States Department of State (DOS) protects and promotes U.S. security, prosperity, and democratic values across the world. Alpha Omega is engaged with DOS on multiple fronts, including programs that advance America’s public diplomacy efforts through cultural, academic, and artistic exchange programs. One such engagement involves cloud modernization and optimization in support of public diplomacy programs and analytics.

Our optimization efforts presented a unique challenge: migrating out of AWS GovCloud and into the AWS Commercial Cloud. There are many playbooks for migrating infrastructure from on-premises to the AWS Commercial Cloud, and a subset of them also provide guidance on migrating to AWS GovCloud from an on-premises or commercial enclave. On the surface, the task seemed like a straightforward migration, given that the AWS Commercial Cloud is less restrictive, offers more services, and provides more migration tools than AWS GovCloud. But the restrictive nature of GovCloud makes migrating out a little more complex than any other type of migration, especially when the documentation surrounding it is sparse.

With help from our Cloud Center of Excellence (CCOP), Alpha Omega’s cloud team at DOS took on this challenge armed with the CCOP knowledge base, resolving many gotchas and pitfalls while avoiding negative impacts on schedule and cost. A typical cloud application uses more than 30 services, whereas a federal enterprise application can use 100 or more. To maintain brevity and readability, this document covers migration strategies and ways to avoid pitfalls only for the most prominent and common AWS services.

What is GovCloud? – The back story

AWS GovCloud exists as a separate isolated partition of the AWS cloud environment. It is designed to meet very stringent requirements set forth by the US government to be used for sensitive workloads. These regulatory requirements include meeting the standards for FedRAMP High, DoD Impact Levels 4 and 5, as well as International Traffic in Arms Regulations (ITAR) and the Export Administration Regulations (EAR). Physical and logical access to GovCloud for AWS personnel is restricted to properly vetted US citizens only.

There are some administrative and practical differences between AWS GovCloud and AWS’ commercial offering. First, only two regions are available, and both reside in US locations. These regions are not accessible from within commercial AWS regions, and vice versa; they are separate entities. All account information, credentials, service endpoints, etc. are unique to the GovCloud partition. Additionally, not all services found in a commercial account are available in GovCloud, and those that are available are not always implemented in exactly the same way. All services must go through the proper government vetting and approval processes. Some services are never approved, and some are implemented a bit differently than they are in the commercial partition.

If a workload only requires a FedRAMP Moderate level or lower and does not need to communicate or share data with systems at a higher level, consideration should be given to choosing the AWS Commercial environment over the GovCloud environment. The four East and West regions of the commercial partition have received a Provisional Authorization to Operate (ATO) at the Moderate Impact Level. More services get FedRAMP approved, and approved faster, in the AWS Commercial environment. Many of these services allow for a faster and higher level of innovation.

Due to the hard separation of partitions, some differences in services and the fact that it is meant to be more difficult to get data out of GovCloud, it can be very difficult to move back to the AWS commercial partition once you’re already in GovCloud. While there are many articles out there about how to migrate into GovCloud, there isn’t much information about how to get back out of it.

So, what do you do if workloads have already been deployed into AWS GovCloud and there is a desire or need to move them to AWS Commercial? What are some of the challenges you might face when attempting to migrate resources over? We will attempt to address the most significant of these differences and challenges throughout the rest of this paper.

Migrating the major services

One of the great things about the cloud is the ability to repeatably create resources from scripts, templates, or code. The vast majority of AWS resources can be turned into templates and re-created in a new account using Infrastructure as Code (IaC). Native AWS CloudFormation templates or a third-party utility such as Terraform can be used for this process. There are, however, a few major services and features that require a bit more thought and work when migrating from AWS GovCloud to the AWS Commercial Cloud.
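One detail that tends to surface when templates are re-created in the other partition is that ARNs embed the partition name (`aws-us-gov` in GovCloud, `aws` in Commercial). The following is a minimal sketch of rewriting such ARNs before reusing a template. The function name, the region mapping, and the account IDs are hypothetical and chosen only for illustration; real templates may need additional service-specific changes.

```python
def rewrite_arn(arn, region_map, account_map):
    """Return a Commercial-partition version of a GovCloud ARN.

    ARN layout: arn:partition:service:region:account-id:resource
    ARNs that are not in the aws-us-gov partition are returned unchanged.
    """
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn":
        raise ValueError(f"not a valid ARN: {arn!r}")
    _, partition, service, region, account, resource = parts
    if partition != "aws-us-gov":
        return arn
    # Empty region/account fields (as in S3 bucket ARNs) stay empty.
    region = region_map.get(region, region)
    account = account_map.get(account, account)
    return ":".join(["arn", "aws", service, region, account, resource])


# Hypothetical mappings: which Commercial region and account replace which.
REGION_MAP = {"us-gov-west-1": "us-west-2", "us-gov-east-1": "us-east-1"}
ACCOUNT_MAP = {"111122223333": "444455556666"}

print(rewrite_arn("arn:aws-us-gov:s3:::example-bucket", REGION_MAP, ACCOUNT_MAP))
print(rewrite_arn(
    "arn:aws-us-gov:rds:us-gov-west-1:111122223333:db:example-db",
    REGION_MAP,
    ACCOUNT_MAP,
))
```

The mapping tables are passed in rather than hard-coded so the same helper can be reused for whichever target regions and accounts a migration actually uses.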

VPCs and Networking Migration Challenges and Solutions

At the heart of a cloud environment is your Virtual Private Cloud, or VPC, which is your “virtual data center” that networks all your resources together. While VPCs are functionally the same in each partition, there are challenges in getting the two to communicate with each other. In either the Commercial or Government partition, VPCs in the same or different accounts can be linked together using either VPC Peering or AWS Transit Gateway, allowing the resources in each VPC to communicate with each other. Unfortunately, there is no way to peer VPCs in GovCloud accounts with those in Commercial Cloud accounts.

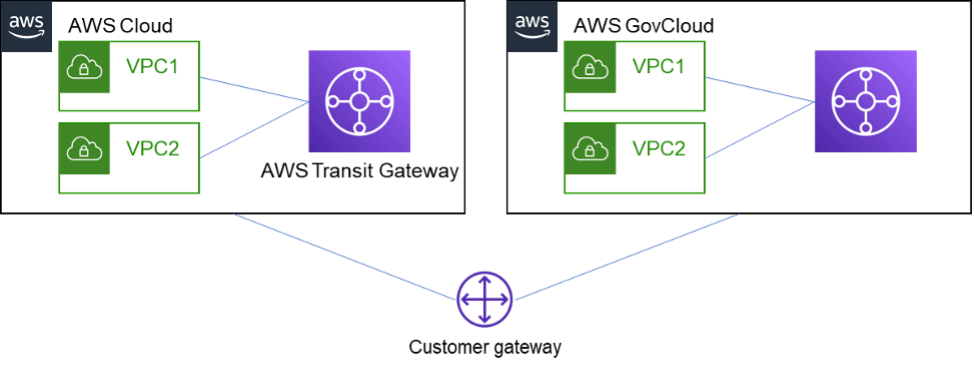

The first option is to create a VPN connection between the two VPCs. This can be done in one of two ways. In the first, one end of the VPN uses AWS Site-to-Site VPN, while the other uses a VPN software appliance, such as a Cisco ASA. There is a caveat to this approach, though: AWS Site-to-Site VPN in GovCloud requires that your VPN device support IKEv1 or IKEv2 with DH group 14. The other way, which avoids this requirement, is to use a VPN appliance in both accounts. This gives a little more flexibility, especially if you already have support or software contracts with a particular network vendor. The advantage of using the AWS VPN, if used in conjunction with Transit Gateway, is that it allows multiple VPCs to connect using a single VPN connection.

The next option, if you have an on-premises environment, is to establish a VPN or Direct Connect link between each account and your on-premises network. This option is especially useful (or necessary) if cloud resources need to communicate with on-premises resources. If the on-premises VPN device can perform transitive routing (such as a Cisco ASA), and AWS Site-to-Site VPN with Transit Gateway is used on each account, then resources in both cloud accounts and on-premises resources can all communicate with each other (as depicted in the following diagram). How VPC connectivity is addressed will affect what methods must be used to migrate the rest of the cloud resources.

RDS Databases Migration Challenges and Solutions

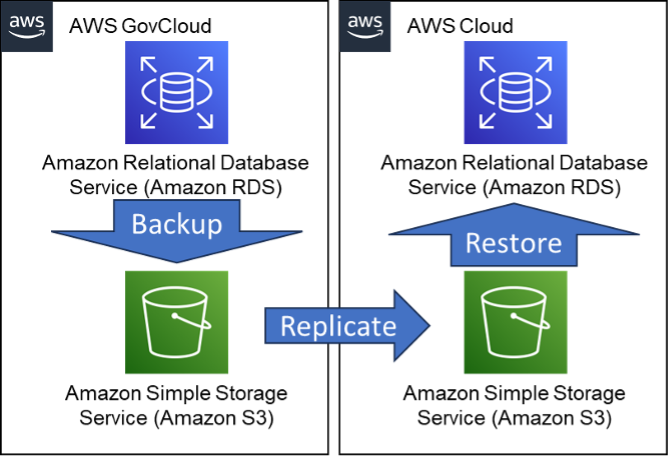

Normally, when migrating between RDS instances and clusters within the same partition, you can simply share an RDS snapshot with another account and restore that snapshot to a new database. Unfortunately, that is not the case when migrating from GovCloud to the Commercial Cloud. Alternative methods are needed to get your data moved, and the methods available depend on which database engine your RDS instance is running.

The first method available is to export a snapshot to an S3 bucket, and then copy that snapshot from there (the S3 copy will be addressed later). This feature is available for the MariaDB, MySQL, and PostgreSQL database engines. Once the snapshot is copied, you would then use the “Restore from Snapshot” option. This method is the most straightforward to execute and requires no DBA experience for the migration.

The next method available is one that allows Oracle to interact with S3. The “S3_INTEGRATION” option needs to be added to the DB option group for the Oracle RDS instance, and the “rds-s3-integration-role” added to the database instance. An Oracle administrator can then use SQL*Plus to export the data in the database to the S3 bucket. The exports are then copied to the new account and loaded into the new database instances using SQL*Plus and the S3_INTEGRATION option in the new account.

The third method applies to SQL Server RDS instances. This option is like the one for Oracle: the “SQLSERVER_BACKUP_RESTORE” option needs to be added to the DB option group for the database. Additionally, an IAM role needs to be applied to the instance that allows it to access the S3 bucket or buckets that will be used for backup. After these two settings are applied to the instance, management tools such as SQL Server Management Studio or Cloudbasic can be used to write a backup of the database to S3. Once again, the data in S3 is then copied to the new account, and the same options are used to restore the data into the new instance.

The last option available depends on a VPN connection being established. If connectivity has been established between the accounts, then data can be read, copied, or replicated at the database level with standard client connectivity. This option relies heavily on a database administrator to establish connectivity and perform the data copies.
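The engine-by-engine choices in this section can be summarized as a small lookup. This is only a sketch of the decision logic as described here, not an AWS API: the function name and the returned labels are hypothetical, and the engine strings assume RDS-style identifiers such as `postgres`, `oracle-ee`, or `sqlserver-se`.

```python
# Engines for which this section describes the snapshot-export route.
SNAPSHOT_EXPORT_ENGINES = ("mariadb", "mysql", "postgres")


def choose_rds_migration_method(engine, vpn_available=False):
    """Map an RDS engine identifier to the migration route described above."""
    engine = engine.lower()
    if engine.startswith(SNAPSHOT_EXPORT_ENGINES):
        return "snapshot export to S3"
    if engine.startswith("oracle"):
        return "Oracle S3_INTEGRATION option"
    if engine.startswith("sqlserver"):
        return "SQLSERVER_BACKUP_RESTORE option"
    if vpn_available:
        # Fallback: replicate or copy at the database level over the VPN.
        return "database-level copy over VPN"
    raise ValueError(f"no migration route described for engine {engine!r}")


for name in ("postgres", "oracle-ee", "sqlserver-se"):
    print(name, "->", choose_rds_migration_method(name))
```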

EC2 Instance Migration Challenges and Solutions

In AWS, EC2 instances can normally be migrated between accounts by directly sharing snapshots and machine images. Unfortunately, this can’t be done when the two accounts are in separate AWS partitions. Some extra steps must be taken to get these machines copied over.

Several steps are needed to copy EC2 instances over to the new account. The first thing to consider is that a standalone snapshot cannot be easily moved (there are ways, using another instance and a temporary volume, but they are out of scope for this paper). Instead, an AMI of the machine must be created first. Be sure to create a copy of the AMI with unencrypted volumes, as KMS keys also cannot be shared across partitions (disable “Always encrypt new EBS volumes” for the region in your account before trying to create the unencrypted copy). Once there is an AMI, the CreateStoreImageTask API action (create-store-image-task in the AWS CLI) can be used to export the AMI as an object in a designated S3 bucket. Once the AMI is in an S3 bucket that the new account user has access to, the CreateRestoreImageTask action (create-restore-image-task in the AWS CLI) can be used to register the AMI in the new account from S3. From here, a new machine can be created from that AMI. If the whole machine does not need to be restored, registering the AMI also registers the EBS snapshots contained within the AMI in the new account.
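The store-and-restore sequence can be sketched with boto3-style EC2 clients. This is a minimal sketch under stated assumptions rather than a definitive implementation: the helper names, bucket names, and AMI name are hypothetical, the clients are passed in so each can carry credentials for its own partition, and the stored object is assumed to have already been copied to a bucket the destination account can read.

```python
def store_ami(ec2_client, image_id, bucket):
    """Start exporting an AMI to S3 in the source account.

    Returns the key of the S3 object that will hold the AMI.
    """
    response = ec2_client.create_store_image_task(ImageId=image_id, Bucket=bucket)
    return response["ObjectKey"]


def store_task_state(ec2_client, image_id):
    """Return the current state of the store task for an AMI, or None."""
    response = ec2_client.describe_store_image_tasks(ImageIds=[image_id])
    results = response["StoreImageTaskResults"]
    return results[0]["StoreTaskState"] if results else None


def restore_ami(ec2_client, bucket, object_key, name):
    """Register an AMI in the destination account from the copied S3 object.

    Returns the ID of the newly registered AMI.
    """
    response = ec2_client.create_restore_image_task(
        Bucket=bucket, ObjectKey=object_key, Name=name
    )
    return response["ImageId"]
```

In practice the source client would be created with something like `boto3.client("ec2", region_name="us-gov-west-1")` using GovCloud credentials, and the destination client with Commercial credentials in the target region. The store task runs asynchronously, so `store_task_state` would be polled until the task finishes before the object is copied across.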

These commands have some hard limits that cannot be changed. CreateStoreImageTask has a limit of 600GB of work queued at one time. This means, for example, that if a 700GB instance is being stored to S3, another job cannot be started until that one finishes. Similarly, CreateRestoreImageTask has a limit of 300GB queued. If a migration is sensitive to downtime, these limits must be considered during planning.
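When many AMIs have to be moved, the queue limits suggest grouping them into batches that are submitted one after another. The following is a rough planning sketch, assuming the 600GB and 300GB figures quoted above; the function name and the AMI sizes are hypothetical, and the grouping is a simple first-fit heuristic rather than anything AWS prescribes.

```python
def plan_batches(ami_sizes_gb, limit_gb):
    """Group AMIs into batches whose combined size stays within limit_gb.

    An AMI larger than the limit gets a batch of its own, mirroring the
    case where a single large job blocks the queue until it finishes.
    """
    batches = []
    # Largest first, so big AMIs claim batches before small ones fill gaps.
    ordered = sorted(ami_sizes_gb.items(), key=lambda item: (-item[1], item[0]))
    for ami_id, size in ordered:
        for batch in batches:
            if batch["total_gb"] + size <= limit_gb:
                batch["amis"].append(ami_id)
                batch["total_gb"] += size
                break
        else:
            batches.append({"amis": [ami_id], "total_gb": size})
    return batches


# Hypothetical AMI sizes in GB.
sizes = {"ami-a": 700, "ami-b": 400, "ami-c": 200, "ami-d": 150}
for number, batch in enumerate(plan_batches(sizes, limit_gb=600), start=1):
    print(number, batch["amis"], batch["total_gb"])
```

Running the same plan with `limit_gb=300` gives the restore-side batches, which is typically the tighter constraint when estimating a cutover window.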

S3 Buckets Migration Challenges and Solutions

All the different data copies that we have discussed so far end up right here, in the S3 bucket. This flexible storage offering from AWS is the easiest place to land any objects that need to be copied over to another account. Since IAM is separated between partitions, S3 buckets cannot be shared at the account IAM level. Some thought and care must be given to the method used to copy the data to the new account.

The first method relies upon the VPN being in place. This access method is exactly what it sounds like: the S3 bucket is accessed through the VPN, maintaining fully private and secure access to the data in the bucket. The traffic on the other end of the tunnel would use an S3 VPC endpoint located within the GovCloud account to access the data securely. The bucket policy would need to allow the source address in the Commercial Cloud account to access the data.

The next method to copy the data in the S3 bucket is to allow access to the bucket securely via HTTPS over the internet. The bucket policy would need to allow specific designated Elastic IP addresses (EIPs) on the new account to have access to the data in the bucket. An Elastic IP address is preferred over an auto-allocated public IP because it is persistent and reserved until it is released, ensuring that restricted access to the data is never compromised. The EIPs on the new account can either be associated with specific instances, allowing for granular access, or be the external IP of a NAT Gateway. Using the IP of the NAT Gateway allows multiple instances or services to reach the S3 bucket using only one bucket policy.
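A bucket policy for this Elastic IP approach might be generated as follows. This is a sketch under assumptions: the bucket name, the IAM principal, and the IP address are hypothetical placeholders, the principal is assumed to be a GovCloud identity whose credentials are used from the Commercial side, and the read-only actions shown may not match what a given migration needs.

```python
import ipaddress
import json


def build_bucket_policy(bucket, principal_arn, elastic_ips):
    """Build a GovCloud bucket policy that allows reads from specific EIPs."""
    bucket_arn = f"arn:aws-us-gov:s3:::{bucket}"
    # Validate each address and express it as a single-host CIDR block.
    cidrs = [f"{ipaddress.ip_address(ip)}/32" for ip in elastic_ips]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowReadFromDesignatedElasticIps",
                "Effect": "Allow",
                "Principal": {"AWS": principal_arn},
                "Action": ["s3:GetObject", "s3:ListBucket"],
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                "Condition": {"IpAddress": {"aws:SourceIp": cidrs}},
            },
            {
                # Reject any request that does not arrive over HTTPS.
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            },
        ],
    }


policy = build_bucket_policy(
    "example-migration-bucket",
    "arn:aws-us-gov:iam::111122223333:user/example-migration-reader",
    ["203.0.113.10"],
)
print(json.dumps(policy, indent=2))
```

Passing the NAT Gateway's EIP in `elastic_ips` covers every instance behind that gateway with a single policy, as described above.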

The last way to copy the data is to use the AWS DataSync service. DataSync requires that a DataSync agent EC2 instance be stood up in one of the accounts. This agent is then registered with the DataSync service in the other account. Once communication is established, source and destination locations can be set up on each account, and tasks created to copy the data. One advantage of this method is that the service can also be used to move data in NFS, SMB, and HDFS, as well as several other AWS services.

Summary

The very secure and heavily regulated nature of AWS GovCloud makes migration to the AWS Commercial Cloud challenging. The two partitions are designed to be isolated from each other in every way, including VPCs, networking, IAM users and roles, and all other AWS services. Alpha Omega’s Cloud Center of Excellence (CCOP) played a critical role in researching and applying solutions to migration challenges. Our CCOP’s investments in upskilling our cloud resources and in progressing our AWS partnership have enabled our architects to solve many such challenges for our federal customers on their journey to the cloud.